External AI risk isn’t hypothetical. It’s accelerating, and cyber criminals are already putting these tools to work in ways most SMBs aren’t prepared for.

Last week, we covered internal AI risk: Shadow AI, data exposure, and employees using AI with the wrong expectations. If you missed it, you can catch it here: [insert link].

This week, we’re shifting to the other side of the coin: external AI risk.

Cyber criminals. New attack vectors. And social engineering that would have seemed impossible not long ago.

We’ll wrap the series next week with what to do about all of this without reinventing the wheel.

At BSN, we’ve talked for years about the endlessly increasing ingenuity of the cyber criminal 😨

The cat-and-mouse game hasn’t changed. Criminals find new tactics, defenders react, and the cycle continues. What has changed is the power AI gives attackers.

Just as AI has made me better at my job over the last three years, it has also made cyber criminals better at theirs.

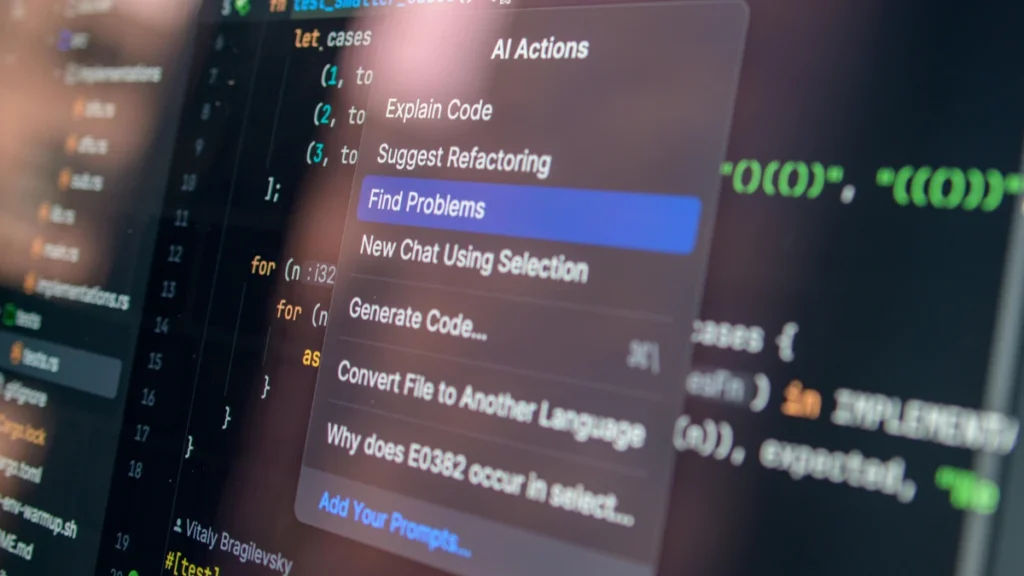

I’m not going to dive into technical exploits today. We’re not talking about AI-written malware, agentic attack chains, or future encryption-breaking scenarios.

As always at BSN, we focus on the most vulnerable layer: the everyday employee 🙂

For ten years, we’ve helped turn employees into a superhuman line of defense. But that job keeps getting harder, and AI adds new layers of complexity.

Employees know the basics.

Check the sender, links, attachments, and message (SLAM). Don’t click suspicious links. Double-verify financial requests.

But AI changes the game.

Grammar Mistakes Are Gone

For years, grammar errors were an easy phishing indicator. Many attacks came from non-native speakers, and it showed.

AI removes that advantage.

Attackers can now generate near-perfect grammar in seconds. We’ve seen a significant drop in reported phishing attempts that contain obvious grammar mistakes.

That means even simple phishing emails are more convincing than ever.

AI-Powered Spear Phishing

In the past, researching a single target could take hours. Now AI can automate that research at scale.

Social media. Websites. Public data. Even compromised data sources.

What used to take hours for one person can now happen in minutes across hundreds or thousands of targets.

Recent research shows a 60% year-over-year increase in global phishing attacks, driven in large part by AI-generated content that allows attackers to operate at greater scale and sophistication than before. (Source: Zscaler ThreatLabz) https://www.zscaler.com/press/zscaler-research-finds-60-increase-ai-driven-phishing-attacks

Phishing emails will be better, more targeted, and more frequent. Some will still get through even the best filters.

Research also shows that AI-generated spear-phishing is now matching or exceeding the effectiveness of human-crafted attacks, making these emails more convincing and more dangerous for employees who aren’t prepared. (Source: SecurityWeek) https://www.securityweek.com/ai-now-outsmarts-humans-in-spear-phishing-analysis-shows/

But phishing is only the beginning.

Deepfakes and Impersonation

This is where employee expectations matter just as much as they did with internal AI risk.

If you’ve experimented with AI-generated video or audio recently, you’ve seen how real it’s getting.

What’s scary isn’t just how good it is today. It’s how fast it’s improving. Progress is measured in months, not years.

That’s a goldmine for cyber criminals.

In early 2024, a finance employee at a multinational company was convinced to transfer roughly $25 million after participating in what appeared to be a legitimate video call with company executives. The meeting looked real. The voices sounded right. The faces matched. The problem was that every other participant on the call was an AI-generated deepfake. The employee wasn’t careless. They were following what looked like a normal executive request, reinforced by familiar faces and voices.

If employees don’t understand what these tools can do, they won’t question what they’re seeing or hearing, even when something feels off.

MSP Talking Points: External AI Risk in the Real World

- AI is amplifying phishing at scale. Organizations are seeing a 60% year-over-year increase in phishing attacks, fueled by AI-generated content that makes attacks faster to produce and harder to detect. (Source: Zscaler ThreatLabz) https://www.zscaler.com/press/zscaler-research-finds-60-increase-ai-driven-phishing-attacks

- AI-generated phishing is outperforming traditional methods. Research shows AI-assisted spear-phishing campaigns are now matching or exceeding the effectiveness of human-crafted attacks, making them more convincing and more dangerous for employees who aren’t prepared. (Source: SecurityWeek) https://www.securityweek.com/ai-now-outsmarts-humans-in-spear-phishing-analysis-shows/

- Deepfake impersonation is already causing real financial loss. In early 2024, scammers used AI-generated video and audio to impersonate executives during a video conference, convincing a finance employee to transfer over $25 million. The employee believed the request was legitimate because the faces and voices matched people they knew. (Source: SC Media) https://www.scworld.com/news/deepfake-video-conference-convinces-employee-to-send-25m-to-scammers

MSPs need to talk about both sides of AI risk. Internal and external. Tooling alone won’t solve this. There’s no technical patch that fixes human risk.

In Part 3, we’ll walk through how to mitigate AI risk while still embracing AI for productivity.

And no, this isn’t about blocking all AI. There’s a better way.

And if you’re like me and hate cliffhangers, our AI go-to-market team can help you start working through this with clients right away.

Now Available: Gen AI Certification From BSN

Lead Strategic AI Conversations with Confidence

Breach Secure Now’s Generative AI Certification helps MSPs simplify the AI conversation, enabling clients to unlock the value of gen AI for their business, build trust, and drive growth – positioning you as a leader in the AI space.